Offensive Application Security

6 November 2025

This article gives an introduction to ethical hacking and web application penetration testing, and how it differs from other types of penetration tests. We cover the basic principles of penetration testing and a simplified model for pentesting methodology. We also highlight key aspects of a high-quality security review, in which the penetration test plays a big part, and the importance of developers embracing a hacker’s mindset (and vice versa).

Penetration Testing

Penetration testing or “ethical hacking” is the art of testing an application or system to identify security weaknesses and vulnerabilities. This can be done with different levels of knowledge of the inner workings of the target. On one end of the spectrum we have so-called black box tests where only public information is available to the testers. The opposite of black box is called white box testing. In such scenarios the penetration testing team is given access to non-public information, such as source code, system documentation, etc. In white box testing scenarios it is also commonplace for testers to be in close contact with the development team and work together to improve the security posture of the team’s system.

Having the ability to tap into the team’s expertise in the domain and business-specific details is very valuable to the testers and enables them to work more efficiently. Tight cooperation between the security experts and the development team also helps increase security awareness in the team.

In either case, it is common for the penetration testers to access the system as if they were users. Using one or more valid accounts on the system, the testers act like an attacker and attempt to identify and exploit vulnerabilities.

White box testing — often performed in close cooperation with the development team. Testers have access to all relevant information such as source code, documentation, etc.

Black box testing — tests performed without knowledge of the inner workings of a system

Gray box testing — some non-public information available, such as partial system documentation and architecture diagrams

Pontus Hanssen, Omegapoint

At Omegapoint we generally promote performing white box penetration tests, and fall back to gray or black box testing when necessary. A common scenario where white box testing is not feasible is when testing a third-party vendor or service provider.

White box tests allow testers to move quickly and focus on finding and exploiting vulnerabilities and weaknesses instead of spending time on reconnaissance. Another way of looking at it is that a motivated attacker can spend months on information gathering and, for example, phishing attacks in an attempt to gain access to non-public information.

Based on this and on Kerckhoffs’s principle, the security of your system should not rely on the assumption that its source code is kept secret.

For systems without public sign-up/registration, we request user accounts as part of the test, since a motivated attacker will be able to compromise an account through other attacks such as social engineering, credential stuffing or bribery.

It is of course crucial to verify the security of any authentication solution and the network protections, but to perform an in-depth penetration test in a short period of time, an efficient way of working is to start with access to accounts and networks. The focus is often not on verifying the security of, e.g., Azure AD or firewalls.

The approach to penetration testing differs greatly depending on the system under test. Penetration tests targeting computer networks and operating systems are in general more tooling- and scanner-heavy, as the penetration testing team searches for and exploits known vulnerabilities and security misconfigurations.

This technique does not naturally translate to application security, especially not to web applications, which are the main topic of this article. Web applications are almost exclusively bespoke, which makes penetration testing them more like research.

That being said, there are security researchers conducting penetration tests on networks and operating systems who find novel vulnerabilities and exploitation techniques. There are also some automated tools and scanners that are a useful part of every web application penetration tester’s arsenal.

Red teaming

In contrast to white box testing with a high level of interaction with the development team, red teaming is a sub-category of black box testing where the testers (the red team) are given the element of surprise. During a red team engagement, only a small group of people on the receiving end of the penetration test are made aware that a test is in progress.

The goal of a red team is to simulate more realistically how an actual attacker would target a system or network. The defending team, or blue team, is not given any advance warning of a red team engagement and will treat it as a real intrusion.

It is not uncommon for red teaming engagements to also include testing physical defenses and allow for social engineering of employees and staff.

Compared to other types of penetration tests, this type of engagement requires a higher level of stealth from the attackers to stay under the radar of the blue team. It is therefore not uncommon for red teaming exercises to be conducted over a longer period, with much of the time spent on reconnaissance and planning.

A red team engagement tests both the effectiveness of the security controls put in place to protect a system and the blue team’s ability to prevent, detect and stop attacks. It stands to reason that unless your organization already has capabilities similar to a security operations center (SOC) or a detection and response team (DART) up and running, the value of a red team test is limited compared to a white box test.

Tobias Ahnoff, Omegapoint

We recommend working continuously with white box penetration testing. And as the organization’s security awareness and processes mature, consider adding red team exercises as a complement.

Christian Wallin, Basalt

This enables comprehensive research of the security exposure, while the blue team at the same time receives training in real-world attack scenarios. Red team exercises are firmly recommended if an organization’s threat landscape includes nation-state actors, which calls for further validation of the security posture in order to cope with the added complexity of the tactics, techniques and procedures that may be employed.

If you want to read more about red teaming, we recommend this blog post (in Swedish) https://www.basalt.se/news/vad-ar-ett-red-team-del-1/.

Just as there is gray box testing as a middle ground between black and white box testing, there is a similar term for red and blue teams working together, usually called a purple team. In a purple team setup, the element of surprise is sacrificed for a rapid feedback loop. The attackers can launch attacks repeatedly while the defenders set up detection software and fine-tune it to increase detection rates.

Penetration test methodology

Much has been written about penetration test methodology. Those interested in a theoretical deep-dive into the topic can read more at, for example, https://owasp.org/www-project-web-security-testing-guide/stable/.

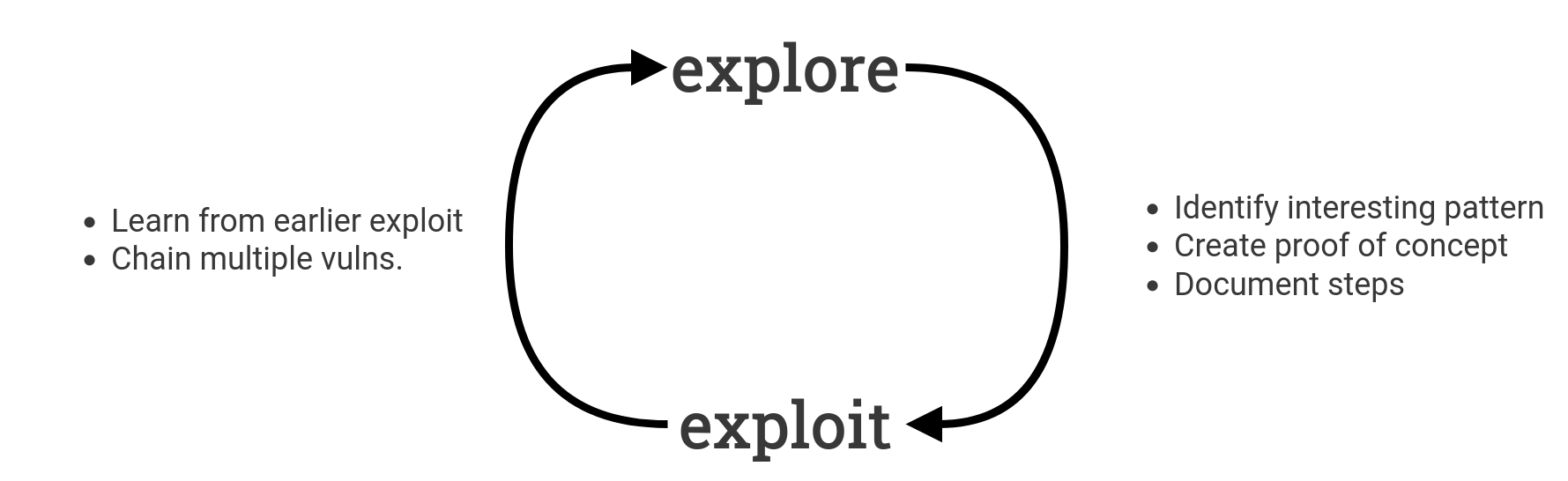

The image below shows a simplified model, that we’ll use for the purpose of this article.

As mentioned earlier, penetration testing a web application requires a large amount of exploration and manual testing, because most web applications are custom and tailor-made, unlike the typical targets of network penetration tests.

When a team of security experts starts a new penetration test, the first thing they do is gather information. Such information may come from the startup meeting or from the source code (if available), but most of it is gathered from the application itself. The first step is simply to start using the application, just as a user would, experimenting with different data flows and functions to try out its features.

Pontus Hanssen, Omegapoint

When testing a web application you start by finding ways that you can impact the state/response of a request. Basically we look for any type of dynamic content such as search results or user created content.

Dynamic components that handle user input are more prone to contain vulnerabilities. It’s simply difficult to introduce vulnerabilities in a static HTML page without any JavaScript.

As interesting patterns are uncovered and vulnerabilities are discovered, we move seamlessly to the exploitation phase, where a minimal proof of concept (PoC) is created. This usually includes backtracking a bit and figuring out the exact steps required to trigger a vulnerability, and who needs to perform them.

The PoC is documented along with a preliminary risk score. High-severity vulnerabilities should always be reported to the customer before the final report is delivered.

Found vulnerabilities are used as input for further exploration. If we have found injection issues in one part of the application, we might be able to find more occurrences elsewhere. It might also be possible to combine multiple low- or medium-risk vulnerabilities and weaknesses into an attack chain with a greater combined risk score than its parts.

Example

A “medium” reflected XSS vulnerability may be chained with a low-risk misconfigured OAuth2 weakness to create a one-click account takeover vulnerability with a critical risk score, as in this write-up by Frans Rosén: https://hackerone.com/reports/1567186

Vulnerabilities and Weaknesses

Even if the way of working may differ between types of penetration tests, the goal of the simulated attackers is to use (or abuse) a system to gain access to information or functionality that they are not supposed to have.

This access might be to read confidential information (confidentiality), to modify information that they are not allowed to modify and that is later consumed by another entity (integrity), or to affect the availability of certain information or functionality so that it is not available to other users and entities when it should be (availability).

When a way to break the constraints of the application like this is found, it is called a vulnerability. A vulnerability can be exploited by an attacker to affect a system’s or application’s confidentiality, integrity or availability.

In white box testing scenarios, which include activities like source code analysis and a review of development and operations processes, it is also possible to identify weaknesses that can be either process-oriented or technical in nature.

A weakness is a pattern or process that cannot be directly exploited by an attacker but increases the risk of introducing vulnerabilities in the future.

Vulnerability — a security issue that can be exploited by an attacker

Weakness — a weak pattern or process that can increase the risk of introducing vulnerabilities

Pontus Hanssen, Omegapoint

An example of a technical weakness is “opt-in authentication”, where developers must mark every non-public endpoint with [RequireAuthentication] or similar. It is easy to make mistakes and forget such an attribute in an API with hundreds of endpoints. A better solution is to enforce authentication for all endpoints and allow developers to mark individual endpoints as public in an “opt-out” approach. This reduces the risk of inadvertently exposing a private endpoint.
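To make the opt-out idea concrete, here is a minimal sketch in Python. The `Router` class and the `public` decorator are hypothetical names for illustration, not a real framework API: every route requires an authenticated user unless its handler is explicitly marked as public.

```python
from typing import Callable, Dict, Optional

# A handler receives the authenticated user name (or None) and returns a response string.
Handler = Callable[[Optional[str]], str]


def public(handler: Handler) -> Handler:
    """Opt-out marker: explicitly allow unauthenticated access to this handler."""
    handler.is_public = True  # type: ignore[attr-defined]
    return handler


class Router:
    """Hypothetical router that is secure by default (authentication required)."""

    def __init__(self) -> None:
        self.routes: Dict[str, Handler] = {}

    def add(self, path: str, handler: Handler) -> None:
        self.routes[path] = handler

    def dispatch(self, path: str, user: Optional[str]) -> str:
        handler = self.routes.get(path)
        if handler is None:
            return "404 Not Found"
        # Authentication is enforced for every route unless the handler opted out.
        if user is None and not getattr(handler, "is_public", False):
            return "401 Unauthorized"
        return handler(user)


@public
def health(user: Optional[str]) -> str:
    return "200 OK"


def list_invoices(user: Optional[str]) -> str:
    # No marker here: forgetting one fails closed instead of exposing the endpoint.
    return f"200 invoices for {user}"


router = Router()
router.add("/health", health)
router.add("/api/invoices", list_invoices)
```

The key property is that a forgotten annotation results in a 401 rather than an exposed endpoint. In ASP.NET Core, a similar effect is commonly achieved with a fallback authorization policy that requires an authenticated user, combined with [AllowAnonymous] on the endpoints that should be public.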

Tobias Ahnoff, Omegapoint

An example of a process weakness is a missing, poorly defined or incomplete off-boarding process for handling a developer leaving the team or organization. Having a documented process and making a habit of following it makes it harder to forget, for example, to rotate shared secrets such as database credentials whenever a team member leaves.

The industry standard for scoring the risk level of vulnerabilities is the Common Vulnerability Scoring System version 3.1 (CVSS 3.1), whose base score weighs together the exploitability and impact of a vulnerability to produce a score between 0.0 and 10.0. This model works best for vulnerabilities but is hard to apply to weaknesses.
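To illustrate how the base score weighs exploitability and impact together, here is a minimal Python sketch of the CVSS 3.1 base score equations as we read them in the FIRST specification. It covers the base metrics only (no temporal or environmental metrics), assumes a well-formed vector string and performs no validation. For real scoring, use the official FIRST calculator.

```python
import math

# Base metric weights from the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}  # used when Scope is Changed
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value (float-safe variant)."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (math.floor(int_input / 10_000) + 1) / 10.0


def base_score(vector: str) -> float:
    # "CVSS:3.1/AV:N/..." -> {"AV": "N", ...}; the version prefix is skipped.
    metrics = dict(part.split(":") for part in vector.split("/") if not part.startswith("CVSS"))
    scope_changed = metrics["S"] == "C"

    # Impact Sub-Score (ISS) from confidentiality, integrity and availability.
    iss = 1 - (1 - CIA[metrics["C"]]) * (1 - CIA[metrics["I"]]) * (1 - CIA[metrics["A"]])
    if scope_changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    pr = (PR_CHANGED if scope_changed else PR_UNCHANGED)[metrics["PR"]]
    exploitability = 8.22 * AV[metrics["AV"]] * AC[metrics["AC"]] * pr * UI[metrics["UI"]]

    if impact <= 0:
        return 0.0
    if scope_changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))
```

For example, `AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H` (unauthenticated network attack with full impact) scores 9.8, while `AV:N/AC:L/PR:N/UI:R/S:C/C:L/I:L/A:N`, a typical reflected XSS vector, scores 6.1. Note how the formula has no input for “this pattern makes future mistakes likely”, which is why weaknesses fit poorly.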

The OWASP Risk Rating Calculator can be used for scoring both vulnerabilities and weaknesses since it better captures the factors surrounding non-exploitable security issues. The calculator is based on OWASP’s Risk Rating Methodology.
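The OWASP Risk Rating Methodology estimates likelihood and impact as averages of factors rated from 0 to 9, maps each average to LOW (below 3), MEDIUM (3 to below 6) or HIGH (6 and above), and combines the two levels in a severity matrix. A minimal Python sketch of that calculation, assuming the caller has already rated the eight likelihood factors and the four technical impact factors (business impact factors could be passed in the same way):

```python
from statistics import mean
from typing import Sequence


def level(score: float) -> str:
    """Map an averaged 0-9 factor score to a likelihood or impact level."""
    if score < 3:
        return "LOW"
    if score < 6:
        return "MEDIUM"
    return "HIGH"


# Overall severity keyed by (impact level, likelihood level).
SEVERITY = {
    ("HIGH", "LOW"): "Medium",
    ("HIGH", "MEDIUM"): "High",
    ("HIGH", "HIGH"): "Critical",
    ("MEDIUM", "LOW"): "Low",
    ("MEDIUM", "MEDIUM"): "Medium",
    ("MEDIUM", "HIGH"): "High",
    ("LOW", "LOW"): "Note",
    ("LOW", "MEDIUM"): "Low",
    ("LOW", "HIGH"): "Medium",
}


def overall_severity(likelihood_factors: Sequence[int], impact_factors: Sequence[int]) -> str:
    # Likelihood: threat agent factors plus vulnerability factors, each rated 0-9.
    likelihood = level(mean(likelihood_factors))
    # Impact: e.g. loss of confidentiality, integrity, availability and accountability.
    impact = level(mean(impact_factors))
    return SEVERITY[(impact, likelihood)]
```

Because likelihood is an explicit input, a weakness that is hard to exploit today but has a meaningful potential impact can still be scored, something the CVSS base equations do not express.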

Tobias Ahnoff, Omegapoint

Tools like CVSS and OWASP Risk Rating can be used as guidance, but we must always consider input from the business. A high-risk finding according to CVSS might be considered “low” by stakeholders, and vice versa. We always encourage the team to re-evaluate the risk level estimates made during security reviews.

A hacker’s mindset

The productivity of a development team is commonly measured based on how many user stories and functionality they can implement during a time period such as a sprint or program increment. Measuring quality and security is much harder — how do you calculate the number of bugs or vulnerabilities not implemented by the team?

One reason why vulnerabilities like broken object-level access control are so prevalent is that developers focus on functional requirements, getting things to work, and not necessarily on “how it should not work”.

For example, say you implement two endpoints:

- GET /api/invoices, which returns a list of invoice IDs for your user, and
- GET /api/invoices/{id}, which returns (sensitive) details of an invoice.

A naive developer might never consider the possibility of someone entering an ID into the second endpoint that they have not previously received from the list endpoint. Why? Because that’s how the UI is implemented and that’s how the app should be used.

The problem is that an attacker is not restricted to using the UI/web frontend when interacting with an application.

A very common tool in any web application penetration tester’s arsenal is the man-in-the-middle proxy. The two most common are Burp Suite and OWASP ZAP. By routing their web browser’s traffic through the proxy, testers can easily inspect, resend and modify requests. Most tools also include additional features like automatic scanning for known vulnerabilities and spiders for crawling a site.

This allows penetration testers to better analyze and understand how an application works “under the hood”. After using the application for a while, the tester sees the following requests in their proxy log:

GET /api/invoices/3456

GET /api/invoices/3457

GET /api/invoices/3458

Given that they only have three invoices connected to their account, this seems reasonable. But what happens if we try to request /api/invoices/3455?
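This next step is easy to script. A minimal Python sketch, assuming a hypothetical `fetch_status` callable that sends GET /api/invoices/{id} with the tester’s own session (for example through the proxy) and returns the HTTP status code. It probes IDs adjacent to the ones the account legitimately owns and reports any that are served anyway. The simulated server and its data are made up for illustration.

```python
from typing import Callable, Iterable, List


def candidate_ids(owned: Iterable[int], radius: int = 2) -> List[int]:
    """IDs within `radius` of the IDs we own, excluding our own IDs."""
    owned_set = set(owned)
    candidates = {
        oid + delta
        for oid in owned_set
        for delta in range(-radius, radius + 1)
        if oid + delta > 0 and oid + delta not in owned_set
    }
    return sorted(candidates)


def find_exposed_ids(
    fetch_status: Callable[[int], int], owned: Iterable[int], radius: int = 2
) -> List[int]:
    """Return IDs answered with 200 OK even though they do not belong to us."""
    return [cid for cid in candidate_ids(owned, radius) if fetch_status(cid) == 200]


# Simulated server: invoices 3455 and 3460 belong to other users.
ALL_INVOICES = {3455, 3456, 3457, 3458, 3460}
MINE = [3456, 3457, 3458]


def vulnerable_server(invoice_id: int) -> int:
    return 200 if invoice_id in ALL_INVOICES else 404  # no ownership check
```

Here `find_exposed_ids(vulnerable_server, MINE)` flags 3455 and 3460. Sequential IDs make this trivial, but switching to random IDs (such as UUIDs) only makes guessing harder; the actual fix is to verify ownership on every request.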

Having a background as a developer, or having read a lot of source code from many different projects, is definitely a bonus, since it helps you imagine how mistakes are introduced.

Let’s have a look at the implementation of the endpoint.

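```csharp
using System.Linq;
using Microsoft.AspNetCore.Mvc;

namespace Defence.In.Depth.Controllers;

// Assumed shape of the invoice service (not shown in the original example).
public interface IInvoiceService
{
    string GetInvoiceById(string id);
}

[Route("/api/invoices")]
public class InvoicesController : ControllerBase
{
    private readonly IInvoiceService invoiceService;

    public InvoicesController(IInvoiceService invoiceService)
    {
        this.invoiceService = invoiceService;
    }

    // Authentication is assumed to be enforced globally (not shown here).
    [HttpGet("{id}")]
    public ActionResult<string> GetById([FromRoute] string id)
    {
        // Input validation: reject empty, overly long or non-alphanumeric IDs.
        if (string.IsNullOrEmpty(id) || id.Length > 10 || !id.All(char.IsLetterOrDigit))
        {
            return BadRequest("Parameter id is not well formed");
        }

        // Operation check: may the caller read invoices at all?
        var canRead = User.HasClaim(c => c.Type == "urn:permissions:invoices:read" && c.Value == "true");
        if (!canRead)
        {
            return Forbid();
        }

        // Missing: no check that this particular invoice belongs to the caller.
        var invoice = invoiceService.GetInvoiceById(id);
        return Ok(invoice);
    }
}
```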

There are multiple steps that need to be taken when authorizing a request.

- ✔ is authenticated

- ✔ is allowed to perform the operation requested

- 𐄂 is allowed to perform the operation on the data specified

The last (but crucial) step is often overlooked and leads to Broken Object Level Authorization (BOLA) vulnerabilities, also commonly referred to as Insecure Direct Object Reference (IDOR). In this case, it enables a user to access the invoices of other users.
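A minimal sketch of the missing third check, written in Python rather than C# for brevity. The types `User` and `Invoice` and the in-memory `INVOICES` store are hypothetical stand-ins for the real invoice service; the permission string reuses the claim type from the controller. All three steps are performed, and the invoice owner is compared with the caller before anything is returned.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple


@dataclass
class User:
    user_id: str
    permissions: Set[str] = field(default_factory=set)


@dataclass
class Invoice:
    invoice_id: str
    owner_id: str
    details: str


# Hypothetical stand-in for the invoice service's data store.
INVOICES: Dict[str, Invoice] = {
    "3455": Invoice("3455", "bob", "Bob's invoice"),
    "3456": Invoice("3456", "alice", "Alice's invoice"),
}


def get_invoice(user: Optional[User], invoice_id: str) -> Tuple[int, str]:
    # Step 1: is the caller authenticated?
    if user is None:
        return 401, "Unauthorized"
    # Input validation, mirroring the C# example.
    if not invoice_id or len(invoice_id) > 10 or not invoice_id.isalnum():
        return 400, "Parameter id is not well formed"
    # Step 2: may the caller read invoices at all?
    if "urn:permissions:invoices:read" not in user.permissions:
        return 403, "Forbidden"
    # Step 3: may the caller read *this* invoice? (the check the controller lacks)
    invoice = INVOICES.get(invoice_id)
    if invoice is None or invoice.owner_id != user.user_id:
        # Same answer for "missing" and "not yours", so IDs cannot be enumerated.
        return 404, "Not Found"
    return 200, invoice.details
```

Returning 404 rather than 403 for someone else’s invoice is a design choice that avoids confirming which IDs exist; either is an improvement over returning the data.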

While BOLA vulnerabilities are common, they are far from the only type of vulnerability, and different vulnerabilities are exploited using different methods. A good starting point for learning more about common vulnerability types is the OWASP Top 10.

For a secure implementation that addresses the vulnerability types covered by the OWASP Top 10, see our article on Secure APIs by design.

When performing web application penetration tests, it is very common to search for ways to use (abuse) an application’s functionality and extend it beyond its intended use, in other words to find scenarios where the application is turned against itself to compromise its own confidentiality, integrity or availability. If these issues were found during development or QA, they would most likely just be classified as “bugs” instead of vulnerabilities, and they might be caused by faulty or unclear requirements. Some examples are improper access control, server-side request forgery and broken business rules.

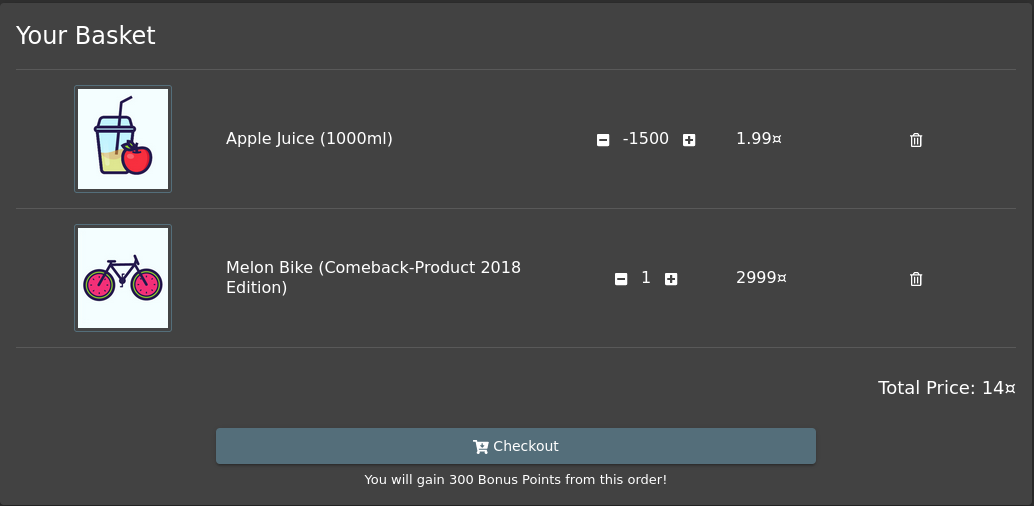

A not uncommon issue for online stores is that it’s possible to add a negative quantity of an article to your cart. By filling their cart with, for example, -100 postcards at 10 SEK each, an attacker can add a single 1000 SEK item to the cart and have the total come out to 0. Depending on the store’s back-office processes, such an order could be treated as, for example, a return plus a purchase.

The screenshot shows an example of such a vulnerability present in the (intentionally vulnerable) security education application OWASP Juice Shop.
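A minimal Python sketch of that arithmetic and of a server-side business rule that rejects it, assuming prices are kept as whole SEK integers and that the rule is simply “quantity must be at least 1” (real stores will likely have more rules, such as upper limits). `CartLine` and the two total functions are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CartLine:
    article: str
    unit_price_sek: int
    quantity: int


def total_naive(lines: List[CartLine]) -> int:
    # Trusts client-supplied quantities: -100 * 10 + 1 * 1000 == 0.
    return sum(line.unit_price_sek * line.quantity for line in lines)


def total_validated(lines: List[CartLine]) -> int:
    # Enforce the business rule on the server, regardless of what the UI allows.
    for line in lines:
        if line.quantity < 1:
            raise ValueError(f"invalid quantity {line.quantity} for {line.article}")
    return total_naive(lines)


cart = [CartLine("postcard", 10, -100), CartLine("expensive item", 1000, 1)]
```

With this cart, `total_naive(cart)` returns 0, while `total_validated(cart)` raises a `ValueError` before any total is computed.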

Pontus Hanssen, Omegapoint

Finding these kinds of vulnerabilities requires an understanding of the application’s domain, which is why it is very beneficial to be in communication with the development team during a penetration test.

The developers are the experts in their domain!

We want to emphasize the importance of security-focused tests, both manual and automated. See our article Test driven application security.

There are also some vulnerabilities that are more related to “odd” or complex behavior of web browsers. While most kinds of vulnerabilities require a large portion of curiosity and asking “what would happen if I do X?”, this class requires much more in-depth technical knowledge of web browsers.

The web browser is one of the most complex pieces of software that we use and has in recent years become the application that runs all other applications. Web standards are constantly evolving to provide a better user experience and make it easier for developers to create feature-rich applications. But even though the way we develop web applications is changing, the internet is full of existing applications that will not be updated to follow the latest trends and standards.

Take for example the official web site for the 1996 live action/animated sports comedy Space Jam. The site is still live at https://www.spacejam.com/1996/, practically unchanged since it was first published, and still renders perfectly in a modern web browser almost 30 years later.

This (some would say extreme) level of backwards compatibility is why issues like Cross-Site Request Forgery (CSRF), Cross-Site Scripting (XSS) and clickjacking are still problems today.

We find that many developers struggle with understanding how web browsers work with respect to security and defenses like the same-origin policy. Our defense in depth article on web browsers covers this in more detail.
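The core of the same-origin policy is simple to state: two URLs have the same origin only if their scheme, host and port all match. A minimal Python sketch of that comparison, assuming http/https URLs and filling in the default ports 80 and 443 when none is given. Browsers have further special cases (such as opaque origins) that this ignores.

```python
from typing import Tuple
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> Tuple[str, str, int]:
    """Return the (scheme, host, port) tuple that defines a URL's origin."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    port = parts.port or DEFAULT_PORTS[scheme]
    return scheme, parts.hostname or "", port


def same_origin(a: str, b: str) -> bool:
    return origin(a) == origin(b)
```

Note that the policy mainly restricts reading cross-origin responses; it does not stop the browser from sending cross-origin requests, which is part of why CSRF remains possible.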

In conclusion, a hacker’s mindset requires a mix of curiosity and technical knowledge to identify and address web application vulnerabilities. With the increasing complexity of web browsers and evolving web standards, it is essential to stay up-to-date and it helps a lot to work closely with development teams.

In contrast, the developer’s mindset is often focused on making things work, sometimes to a fault. We believe developers can benefit from asking themselves “how can this be abused by an attacker?” more often. At the same time, penetration testers should ask “which corners would I cut if I were short on time or lacked security training when implementing this feature?”.

Security review process and timeline

While penetration tests are a great tool for identifying vulnerabilities, spreading knowledge and increasing security awareness within a team or organization on a technical level, they are not the only tool. Many organizations adopt standards for information security and secure application development such as ISO 27k, CIS Controls and OWASP ASVS. These standards define controls that help organizations implement processes and ways of working for building more secure systems over time.

When performing white box tests in close contact with the development team it is possible to include the penetration test in a larger security review context. A security review typically includes activities such as performing a gap analysis against a security baseline that is based on such standards.

Security reviews by Omegapoint

The exact process and activities that make up a security review will depend on the scope and on who performs it. To give an example, the following section describes how we work at Omegapoint.

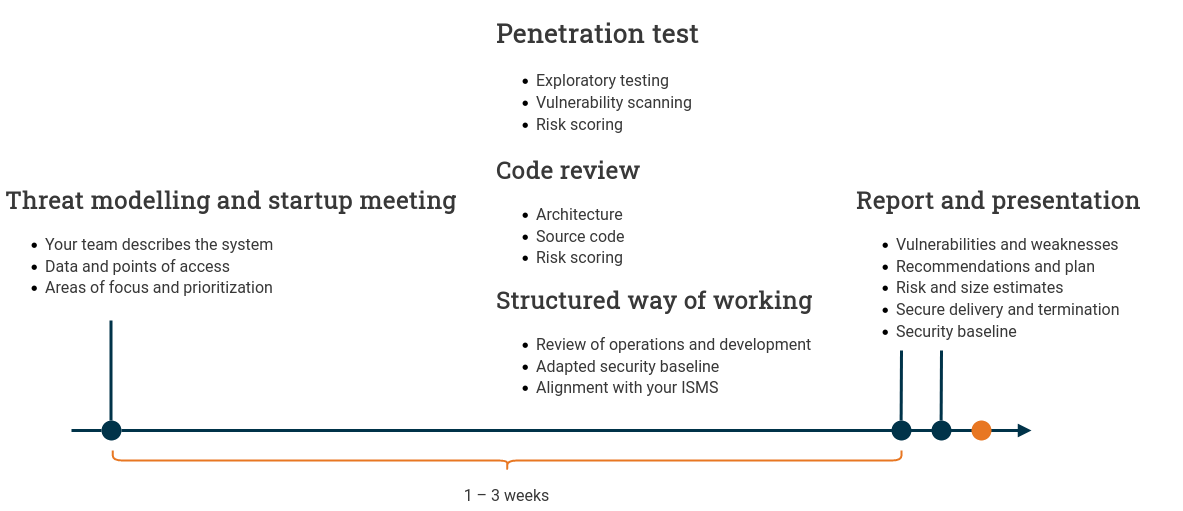

This way of working is based on our experience performing technology-focused penetration tests and security reviews, and it builds on three pillars: an offensive penetration test, a defense-oriented system review, and assistance with defining and adopting a structured way of working for securing systems over time. The typical security review assignment includes a penetration test and, depending on the agreement, one or both of the other two parts.

The system review focuses on architecture, design principles, operations and maintenance of a system, and includes source code review and in-depth interviews with development team representatives. The result of a system review is commonly a set of weaknesses that may be technical or process-oriented in nature but constitute a risk to the system.

As part of a security review, Omegapoint can help bridge the gap between an information security management system (ISMS) or framework such as CIS Controls and the development team by creating a security baseline with checks and controls that are relevant and applicable to the team. The baseline can be used to measure a team’s security posture over time. Having a tailored security baseline helps the development team focus on improving security where they can have the biggest impact.

All reviews begin with a startup meeting, which includes a threat modelling exercise for the given scope. In this meeting, security experts from Omegapoint, together with a team from the customer organization (ideally with representatives from the development team), look at the system and identify the data to protect, the available endpoints and how access control works. Together, they also identify any external integrations of interest.

This first meeting also covers specific areas of focus and design patterns used by the system. Our long experience in security reviews, application development, operations and maintenance enables us to identify strengths and weaknesses in architecture and patterns at this early stage.

The meeting ends when all practical details required to start testing are agreed upon, such as the in-scope assets and the user accounts available for testing.

Tobias Ahnoff, Omegapoint

The size and therefore scope of a review is important. For large organizations and systems we recommend doing multiple smaller reviews to touch upon all critical parts of the organization rather than digging deep in just one application.

Apart from finding vulnerabilities and weaknesses, an important benefit of performing security reviews is an increased awareness and motivation when it comes to application security.

In our experience, there’s no better way to raise awareness in a team than doing a penetration test of a system they develop and operate.

Depending on the agreement, the review is performed as a combination of the three activities: penetration test, system review and structured way of working. These activities are performed in parallel. Combining an architecture/code review with a penetration test in this way gives the security experts better opportunities to find weak patterns and to spend more time on essential details than an automated penetration test would.

Björn Larsson, Omegapoint

A strength of running the offensive penetration test and the defensive system review simultaneously is that the different perspectives meet when reviewing a problem. This allows our team to quickly explore the full extent of a vulnerability, or to find places where a weak pattern repeats itself, which lets us identify more vulnerabilities.

The typical review is two weeks, but can vary depending on the scope and agreement.

High-severity findings are reported to the customer ahead of time, before the final report is presented so that the team can start working on mitigations immediately. This way, it’s possible to offer patch verification for any mitigations put in place during the test.

The review concludes with a written report, and a meeting where both parties together process the weaknesses and vulnerabilities found during the review. This meeting is an opportunity to ask questions, discuss solutions and spread security awareness. It can also serve as a handover between the security experts and the development team, so that the development team can seamlessly continue the security work that has begun with the review.

Pontus Hanssen, Omegapoint

The report is, by nature, negatively loaded, since its focus is to identify problems, weaknesses and vulnerabilities in an application. There’s generally no room for writing about all the great things a development team does. We focus on involving the development team during the test and sharing findings with them as we find them. Having an ongoing dialogue serves two purposes:

- There’s less of a “cold shower” moment when the report is received since everything in it is already known

- The developers can be part of the discussions and generally have very good ideas and insights into where to look for the next vulnerability. After all, they are the experts in their domain.

As part of concluding a security review, the security experts remove or destroy all materials, including the report, that have been gathered or produced during the review.

Omegapoint does not retain any sensitive information after a completed security review.

While the result of a security review is a written report, it is crucial that the work does not end here. The development team must understand and analyze the findings. The analysis usually results in the creation of product backlog items that can be planned for future releases.

Martin Altenstedt, Omegapoint

For reviews including help with a structured way of working, it is common for Omegapoint to assist with prioritization and planning when identifying future work based on the review findings.

We recommend working continuously with penetration testing. How often depends on the organization and its security requirements.

We also recommend having time and resources to address the findings. It is very unfortunate if the investment in a security review ends with a report that has no effect on the production environment.

Other types of ethical hacking

A trend that has been growing steadily over the last couple of years is companies and organizations hosting so-called bug bounty programs or coordinated vulnerability disclosure (CVD) programs. While this is a large topic that probably deserves its own article, we’ll cover the basics here.

One of the core concepts behind bug bounty and CVD programs is a “safe harbor”, i.e. that a system owner allows security researchers to perform research on their systems without pursuing legal action. This permission usually comes with some requirements on the researchers, such as “do not perform denial of service attacks” and “do not exploit a vulnerability further than necessary to show impact”.

See https://responsibledisclosure.nl/en/ for a good example of a policy.

Some organizations choose to reward valid reports with money (a bug bounty), swag or other types of appreciation. The Dutch government is famous for sending researchers a black T-shirt with the text “I hacked the Dutch government and all I got was this lousy T-shirt”.

There are bug bounty platforms that help organizations and businesses connect with security researchers and can help with bug triage and administration of a program. Many platforms also invest heavily in the researcher community to make researchers feel welcome.

There are many bug bounty platforms, each with their own pros and cons.

Some organizations such as Google and Microsoft host their own bug bounty platforms.

How to set up your own bug bounty program or vulnerability disclosure policy, and what to consider when doing so, is probably a topic worthy of its own article. It might, for example, be a good idea to perform a penetration test first to eliminate low-hanging fruit, which limits the number of duplicate reports and the triage burden on your security team.

You don’t have to be an expert security researcher to start bug bounty hunting and many of the large platforms have excellent resources for helping you get started.

Some organizations host their own program by simply publishing a coordinated vulnerability disclosure policy on their website, or by uploading a security.txt file.

security.txt is a file format to aid responsible vulnerability disclosure, now published by the IETF as RFC 9116, and it is rapidly gaining adoption. Read more about it at https://securitytxt.org/. Our security.txt can be found at the standard location https://securityblog.omegapoint.se/.well-known/security.txt.
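RFC 9116 requires at least one Contact field and exactly one Expires field. A minimal Python sketch of a checker for those two rules, run against a hypothetical example file for example.com (not a real policy). It treats every non-comment “Field: value” line as a field and does not handle PGP-signed files.

```python
from datetime import datetime, timezone
from typing import Dict, List, Optional


def parse_security_txt(text: str) -> Dict[str, List[str]]:
    """Collect "Field: value" lines into lists keyed by lower-case field name."""
    fields: Dict[str, List[str]] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        name, sep, value = line.partition(":")
        if sep:
            fields.setdefault(name.strip().lower(), []).append(value.strip())
    return fields


def check_security_txt(fields: Dict[str, List[str]], now: Optional[datetime] = None) -> List[str]:
    """Return a list of problems; an empty list means the basic checks passed."""
    problems = []
    if not fields.get("contact"):
        problems.append("missing Contact field")
    expires = fields.get("expires", [])
    if len(expires) != 1:
        problems.append("Expires must appear exactly once")
    else:
        # Accept the trailing "Z" (UTC) form, which older Pythons' fromisoformat rejects.
        when = datetime.fromisoformat(expires[0].replace("Z", "+00:00"))
        if when <= (now or datetime.now(timezone.utc)):
            problems.append("file has expired")
    return problems


EXAMPLE = """\
# Hypothetical example, not a real policy
Contact: mailto:security@example.com
Expires: 2030-01-01T00:00:00Z
Policy: https://example.com/vulnerability-disclosure
"""
```

The mandatory Expires field is there so that stale contact information is not trusted forever; a check like this fits naturally into a scheduled job.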

Getting started with ethical hacking

Not everyone has the privilege of working as a penetration tester and hacking systems and devices all day long. If you are interested in getting started with ethical hacking and penetration testing, there are tons of great (free) resources to help you get started.

One place to start is a bug bounty platform; they usually have great resources for new researchers starting out. We can also recommend PortSwigger’s (the company behind Burp Suite) Web Security Academy, a collection of free blog posts, articles, cheat sheets and lab environments for learning about web application security. You can find it here: https://portswigger.net/web-security.

OWASP have a number of fantastic projects as well. Be sure to check out:

- Juice Shop — an intentionally vulnerable web application that you can self-host. There are official write-ups of all vulnerabilities to guide you along.

- OWASP Top 10 — the most common web application vulnerability types right now.

- OWASP API Security Top 10 — the most common API vulnerability types.

- OWASP ASVS — technical web application security controls, useful to both builders and breakers.

Want to learn more?

Please contact us to learn more about this and how we work with penetration tests and security reviews at Omegapoint. For information on how to reach us, see the Contact page.

If you are interested in defensive application security we recommend reading our article series Defense in Depth.

For more reading on offensive security, we recommend our writeups. They were documented as part of responsible disclosure, in cooperation with the affected parties, and show how vulnerabilities were identified and how they could be exploited (before patching).

Writeup: AWS API Gateway header smuggling and cache confusion — Issues with AWS API Gateway Authorizers

Writeup: Keycloak open redirect (CVE-2023-6927) — How to steal access tokens in Keycloak < 23.0.4

Writeup: Stored XSS in Apache Syncope (CVE-2024-45031) — Privilege escalation between IAM portals

Writeup: Account Takeover in Authentik due to Insecure Redirect URIs (CVE-2024-52289) — Redirect URI validation using RegEx

Writeup: Exploiting TruffleHog v3 — Bending a Security Tool to Steal Secrets

Writeup: Leaked JWT Tokens as Part of the Curity HAAPI Authorization Flow — Shared JWT key may allow unauthorized access to misconfigured APIs

Writeup: Subreport Remote Code Execution in Stimulsoft Reports (CVE-2025-50571) — Remote Code Execution using Reporting Library